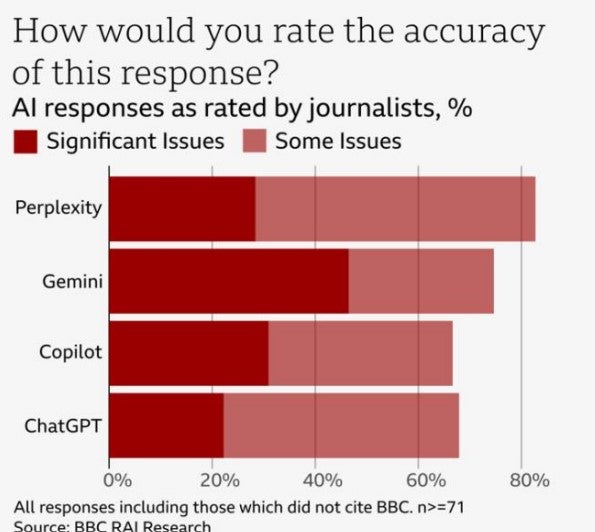

Nine out of ten AI chatbot responses about news queries contained at least “some issues”, BBC research has claimed.

The BBC added that 51% of the AI answers from four chatbots featured “significant issues”, according to the journalists who reviewed the responses.

Responses from Google’s Gemini contained the most significant issues (more than 60%) followed by Microsoft’s Copilot, OpenAI’s ChatGPT and Perplexity (just above 40%).

The BBC said it hopes the tech companies will “hear our concerns and work constructively with us” as a result.

“We want to understand how they will rectify the issues we have identified and discuss the right long-term approach to ensuring accuracy and trustworthiness in AI assistants,” the BBC’s generative AI programme director Pete Archer wrote. “We are willing to work closely with them to do this.”

The BBC also called for an “effective regulatory regime” and for a regulator or research institute to regularly evaluate and take an independent view on the accuracy of news on AI platforms.

In the research, carried out in December, the BBC asked the chatbots 100 questions about the news and asked the platforms to use BBC News sources where possible. Responses with no BBC citations were also included in the analysis and the corporation said other publishers were likely to be similarly affected by the reputational issues identified.

The BBC usually blocks the AI platforms from accessing its websites but this restriction was lifted for the duration of the study. The block is now back in place.

The answers were reviewed by BBC journalists who were experts in the relevant topics, rating them on criteria like accuracy, source attribution, impartiality, distinguishing opinion from fact, editorialisation, context, and the representation of BBC content.

Accuracy is biggest concern for AI chatbot news responses

The most common issues were inaccuracies and responses making claims unsupported by the cited sources.

The vast majority of responses based on BBC journalism were found to have at least some accuracy issues.

The BBC said Google’s Gemini raised the most concerns on accuracy, with 46% of responses flagged as having significant issues. Perplexity’s responses had the most issues overall (more than 80%).

For example, Gemini incorrectly stated: “The NHS advises people not to start vaping, and recommends that smokers who want to quit should use other methods.” The actual NHS advice does recommend vaping to smokers who want to quit.

Microsoft’s Copilot said French multiple rape survivor Gisele Pelicot “found out about the horrific crimes committed against her when she began experiencing unsettling symptoms like blackouts and memory loss” when in fact she learned of what had happened after police showed her videos they found after detaining her husband.

Copilot also misstated Google Chrome’s market share and the year of One Direction singer Liam Payne’s death, and was out by a factor of three on the number of UK prisoners released from jail under the early release programme.

The BBC said Perplexity got dates wrong about the death of broadcaster Dr Michael Mosley and misrepresented statements from his wife, including that she shared “the family’s relief”.

The BBC found eight quotes sourced from its articles that were either altered or not present in the cited webpage, with this issue found in all the chatbots with the exception of ChatGPT. It said 62 responses cited BBC quotes, meaning an error rate of 13%.

Outdated information and editorialised responses cause concern

The BBC noted there were multiple cases in which errors were created by using outdated articles or live blogs as the source for an answer instead of the most up-to-date information.

For example, it said, Copilot used a BBC live page from 2022 as its sole source when asked for the latest on the Scottish independence debate.

The BBC said Gemini produced the most sourcing errors, with its journalists rating more than 45% of responses as containing significant errors. A quarter (26%) of Gemini responses contained no sources at all.

In addition, the BBC said it found significant editorialisation in more than 10% of Copilot and Gemini responses, 7% from Perplexity and 3% from ChatGPT.

“In addition to presenting the opinions of people involved in news stories as facts, AI assistants insert unattributed opinions into statements citing BBC sources,” the findings stated. “This could mislead users and cause them to question BBC impartiality.”

The BBC added that the short conclusions often given at the end of an AI assistant’s answer are rarely attributed to a source and “can be misleading or partisan on sensitive and serious topics”.

For example, in response to a question about Prime Minister Keir Starmer’s promises to voters, Copilot said he had provided “a comprehensive plan that aims to tackle some of the UK’s most pressing issues”.

The BBC raised concerns about this because three of the four sources cited were from BBC News “which may inadvertently give the impression this conclusion came from a BBC source”.

Another example came from Gemini which said: “It is up to each individual to decide whether they believe Lucy Letby is innocent or guilty,” despite the fact she was convicted in court.

The BBC also noted the chatbots appeared confused by factors like the devolved nature of UK government, with ChatGPT and Perplexity claiming energy price cap rises were UK-wide even though they did not apply to Northern Ireland and this was clear in the cited BBC articles.

BBC News and Current Affairs chief executive Deborah Turness said the research showed that the “companies developing Gen AI tools are playing with fire… Part of the problem appears to be that AI assistants do not discern between facts and opinion in news coverage; do not make a distinction between current and archive material; and tend to inject opinions into their answers.

“The results they deliver can be a confused cocktail of all of these – a world away from the verified facts and clarity that we know consumers crave and deserve.”

Read the full report on Representation of BBC News Content in AI Assistants
